User talk:Ancheta Wis/t

Early life and academic career

He was born in Tui, Galicia, then part of the diocese of Braga, Portugal. He was baptized into the Catholic faith on 25 July 1551;[1] his parents were António Sanches, also a physician, and Filipa de Sousa.[2] Because the family was of Jewish origin, he was legally considered a New Christian under the laws of Portugal and Spain at that time, despite the conversion. In 1550, the French king Henry II granted letters patent to all New Christians who moved to a French city to start a business. António Sanches's brother, Adam-Francisco Sanches, thereupon moved to Bordeaux, France; António Sanches followed his brother's example, moving from Portugal to Bordeaux in 1562.

Francisco Sanches studied in Braga until he was 12 years old, when he and his parents escaped the surveillance of the Portuguese Inquisition. Sanches continued his studies at the Collège de Guyenne from 1562 to 1571.

Centrally, these analog systems work by creating electrical analogs of other systems, allowing users to predict the behavior of the systems of interest by observing the electrical analogs.[3] The most useful of the analogies was that small-scale behavior could be represented with integral and differential equations, so the machines could be used to solve those equations. An ingenious example of such a machine, using water as the analog quantity, was the water integrator built in 1928; an electrical example is the Mallock machine built in 1941. A planimeter is a device that computes integrals, using distance as the analog quantity. Unlike modern digital computers, analog computers are not very flexible: they need to be rewired manually to switch them from one problem to another. Analog computers had an advantage over early digital computers in that they could be used to solve complex problems using behavioral analogs, while the earliest attempts at digital computers were quite limited.
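To make the integrator idea concrete, here is a minimal Python sketch under a loudly stated assumption: the continuous integrators of a differential analyzer are replaced by small digital time steps (semi-implicit Euler), and the function name `integrate_oscillator` is hypothetical. It solves x'' = −x by feeding one integrator's output into the next and wiring −x back into the first, which is the kind of patch an operator would set up by hand.

```python
import math


def integrate_oscillator(x0, v0, t_end, dt=1e-4):
    """Solve x'' = -x by chaining two integrators in a feedback loop.

    Integrator 1 turns acceleration into velocity v; integrator 2 turns v
    into position x. Wiring -x back into integrator 1 closes the loop.
    Digital time steps stand in for continuous analog integration here.
    """
    x, v = x0, v0
    for _ in range(int(round(t_end / dt))):
        a = -x          # the "patch cord": acceleration is minus position
        v += a * dt     # integrator 1: v accumulates a * dt
        x += v * dt     # integrator 2: x accumulates v * dt
    return x, v


# Start at x = 1 at rest; the exact answer is x(t) = cos t, v(t) = -sin t.
x, v = integrate_oscillator(1.0, 0.0, math.pi / 2)
print(f"x = {x:.4f}, v = {v:.4f}")
```

Solving a different equation means changing the `a = -x` line, which mirrors the manual rewiring the paragraph describes.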

Some of the most widely deployed analog computers included devices for aiming weapons, such as the Norden bombsight[4] and the fire-control systems,[5] such as Arthur Pollen's Argo system for naval vessels. Some stayed in use for decades after World War II; the Mark I Fire Control Computer was deployed by the United States Navy on a variety of ships from destroyers to battleships. Other analog computers included the Heathkit EC-1, and the hydraulic MONIAC Computer which modeled econometric flows.[6]

The art of mechanical analog computing reached its zenith with the differential analyzer,[7] built by H. L. Hazen and Vannevar Bush at MIT starting in 1927, which in turn built on the mechanical integrators invented in 1876 by James Thomson and the torque amplifiers invented by H. W. Nieman. A dozen of these devices were built before their obsolescence became obvious; the most powerful was constructed at the University of Pennsylvania's Moore School of Electrical Engineering, where the ENIAC was built. Digital electronic computers like the ENIAC spelled the end for most analog computing machines, but hybrid analog computers, controlled by digital electronics, remained in substantial use into the 1950s and 1960s, and later in some specialized applications. Every digital device is limited by its numerical precision, whereas an analog device is limited by its accuracy.[8] As electronics progressed during the 20th century, the problems of operating at low voltages while maintaining high signal-to-noise ratios[9] were steadily addressed, for a digital circuit is a specialized form of analog circuit, intended to operate at standardized settings (continuing in the same vein, logic gates can be realized as forms of digital circuits). By the time of the Space Race to the Moon, the Apollo Guidance Computer was successfully constructed from 4,100 NOR gates using the RTL logic family of integrated circuits. As digital computers have become faster and gained larger memory (for example, RAM or internal storage), they have almost entirely displaced analog computers. Computer programming, or coding, has arisen as another human profession.
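Building a whole computer from a single gate type is possible because NOR is functionally complete: every other Boolean gate can be composed from it. This Python sketch shows the idea with booleans. The helper names (`not_`, `or_`, `and_`, `xor`, `half_adder`) are illustrative choices, and this is not a description of the Apollo Guidance Computer's actual circuit design.

```python
def nor(a: bool, b: bool) -> bool:
    """The only primitive gate: true exactly when both inputs are false."""
    return not (a or b)


def not_(a: bool) -> bool:
    return nor(a, a)                      # NOR with both inputs tied together


def or_(a: bool, b: bool) -> bool:
    return not_(nor(a, b))                # invert the NOR


def and_(a: bool, b: bool) -> bool:
    return nor(not_(a), not_(b))          # De Morgan: a AND b = NOT(NOT a OR NOT b)


def xor(a: bool, b: bool) -> bool:
    return and_(or_(a, b), not_(and_(a, b)))


def half_adder(a: bool, b: bool) -> tuple[bool, bool]:
    """Return (sum, carry) for two one-bit inputs, using NOR-derived gates only."""
    return xor(a, b), and_(a, b)


for a in (False, True):
    for b in (False, True):
        s, c = half_adder(a, b)
        print(int(a), int(b), "->", "sum", int(s), "carry", int(c))
```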

While trying to track down a Stanovich 2007 citation, I found Stanovich, Keith E. (2007). How to Think Straight About Psychology. Boston: Pearson Education. I am not conversant with this author or his citation, so I commented out its sentences, and would appreciate it if another editor could verify the citation for the benefit of the article. --Ancheta Wis (talk) 02:47, 17 April 2011 (UTC)

But in the meantime, I found that some citations from Thomas Brody, Imre Lakatos, and Goldhaber and Nieto have been commented out. These sources are perfectly good. To demonstrate this, I list relevant quotations and their citations, which have been commented out, for some reason. --Ancheta Wis (talk) 02:47, 17 April 2011 (UTC)

Some background:

- Ludwik Fleck (1935, translated into English 1979) Genesis and Development of a Scientific Fact University of Chicago Press ISBN 0-226-25325-2 Chapter Two:"Epistemological Conclusions from the Established History of a Concept" pp.20-41.

- Fleck is as important as Karl Popper (author of Logic of Scientific Discovery), in the estimation of Thomas Kuhn (author of Structure of Scientific Revolutions), who wrote the June 1976 foreword to the 1979 translation of Fleck; Fleck's concepts are complementary to Popper's views (even as these views evolved during Popper's lifetime). Fleck's starting point is that science is too large a subject for a single mind, and his thesis is that scientific progress depends on communities, which he styles thought collectives (Denkkollektiv). Fleck describes the development of the thinking style (Denkstil) of these communities. Fleck emphasizes that even the terminology in a scientific inquiry is rooted in the problem at hand, however poorly the terminology is formulated in the beginning. As the understanding of a problem is reformulated, its terminology stabilizes and usage in that scientific community promulgates and propagates the thought style of that specific community.

- Pages 27-8: "In the history of scientific knowledge, no formal relation of logic exists between conceptions and evidence. Evidence conforms to conceptions just as often as conceptions conform to evidence. After all, conceptions are not logical systems, no matter how much they aspire to that status. They are stylized units which either develop or atrophy just as they are or merge with their proofs into others."

- Thomas Brody, The Philosophy Behind Physics Springer-Verlag p.45: "scientific theories evolve and change" (Even between the revolutionary phases posited by Kuhn)

- Imre Lakatos, Proofs and Refutations Cambridge University Press p.126: "Epsilon: Really, Lambda, your unquenchable thirst for certainty is becoming tiresome! How many times do I have to tell you that we know nothing for certain?"

- Alfred Scharff Goldhaber and Michael Martin Nieto (23 March 2010) "Photon and graviton mass limits" pp. 939-979 Reviews of Modern Physics 82 January-March 2010: pp 940-1 -- "The canonical view of theory testing is that one tries to falsify theory. ... [A] theory can earn trust in three ways ... First, a striking, even implausible prediction is borne out by experiment or observation ... [Second], people see ways to apply the idea in other contexts. ... [Third] if many closely related subjects are described by connecting theoretical concepts, then the theoretical structure acquires a robustness which makes it increasingly hard - though certainly never impossible - to overturn."

One of the comments hidden in the article cites Kierkegaard without further information, apparently the basis for commenting out the citations. --Ancheta Wis (talk) 02:47, 17 April 2011 (UTC)

- Andrew Lancaster, rather than adding another flavor to the myriad philosophical positions, it may be helpful to use the guidelines of summary style. A table showing the genetic relationship of the philosophical positions might replace the large contribution we are currently enjoying from rtc. In its stead, the summary style guidelines might serve as the rules of engagement for further enlargement of the section. As the summary style guidelines state, as the main article grows organically (à la rtc's contribution), subpages are spawned from it, and the spun-off text is replaced by summaries. Perhaps we might take advantage of the cross-cutting style, which allows us to contrast the current wide-ranging statements side by side from a 50,000-foot view. Then hyperlinks can lead interested readers to the subpages.

- My difficulty with more text on positivism is that it forked from empiricism beginning in the 1920s and more or less died in the 1940s, along with Otto Neurath's contribution to the failed International Encyclopedia of Unified Science. Neurath's monograph was a companion to Kuhn's Structure of Scientific Revolutions. The entire project never went anywhere; Kuhn was definitely a success, in contrast to positivism.

- Perhaps Popper's categorical statements on theory are meant to apply to philosophical theory rather than scientific theory. Popper is actually understandable if this interpretation is taken, as scientists like Feynman were actively antiphilosophical. --Ancheta Wis (talk) 21:15, 14 January 2011 (UTC)

Peirce's triads

Tetrast, while sorting through quotidian items, I noticed that Peirce's triads have an unexpected utility for me. It may be logical to posit a binary proposition P, but P may or may not fit the real world: P, not P, and '?huh?', which Sowa alludes to in 'Thirdness' (see the link above). The size of the third category is a commentary on the fitness of P as a proposition about the real world. In other words, P may or may not be a worthy hypothesis for consideration. A third category allows room for doubt, which of course is the beginning of knowledge for Peirce. --Ancheta Wis (talk) 14:45, 17 October 2010 (UTC)
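One way to make the third category concrete is Kleene's strong three-valued logic, swapped in here as a standard formalization; it is not necessarily the scheme Sowa or Peirce had in mind. In this minimal Python sketch the names `Tri`, `tri_not`, `tri_and`, `tri_or`, and `third_share` are hypothetical, and `third_share` is an assumed way to measure "the size of a third category" over a collection of verdicts on P.

```python
from enum import Enum


class Tri(Enum):
    """Three truth values; UNKNOWN is the third, '?huh?' category."""
    FALSE = 0
    UNKNOWN = 1
    TRUE = 2


def tri_not(p: Tri) -> Tri:
    return Tri(2 - p.value)                 # swaps TRUE/FALSE, leaves UNKNOWN alone


def tri_and(p: Tri, q: Tri) -> Tri:
    return Tri(min(p.value, q.value))       # Kleene: the "less true" value wins


def tri_or(p: Tri, q: Tri) -> Tri:
    return Tri(max(p.value, q.value))       # Kleene: the "more true" value wins


def third_share(verdicts) -> float:
    """Fraction of verdicts on P that are neither TRUE nor FALSE (hypothetical measure)."""
    verdicts = list(verdicts)
    if not verdicts:
        return 0.0
    return sum(v is Tri.UNKNOWN for v in verdicts) / len(verdicts)


# The law of excluded middle no longer holds automatically:
print(tri_or(Tri.UNKNOWN, tri_not(Tri.UNKNOWN)))
```

With UNKNOWN allowed, "P or not P" can itself be UNKNOWN, which is one formal reading of the room for doubt in the comment.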

Possible directions for development

I was actually working on ARM-related points when the merge tag was added; currently the ARM-based OSs allow for faster boot times, for event processing that was specialized for fingertip events, and for increased battery life. The legacy OSs basically ignored these design decisions and it will be some time before they are added. Windows CE allowed for ARM processors, but apparently this was incompatible with Windows as it then stood (as of January 2010). Now that MS has seen the consequences of the legacy decisions, MS has purchased an ARM architecture license to redress this. Again it will take time for the rework to be completed. See the Windows 8 citation in the article (i.e., release in 2012). Event processing is one area where QNX has a competitive advantage. --Ancheta Wis (talk) 01:18, 8 October 2010 (UTC) --edited Ancheta Wis (talk) 12:34, 11 October 2010 (UTC)

In retrospect, the division between the camps is desktop legacy versus untethered target systems for deployment. Since the desktop market is one billion PCs, apparently this outweighed the small team for tablets. Witness the cancellation of the WinCE-based Microsoft Courier project. It remains to be seen how much of the proof-of-concept OS kernel MinWin will get into the reworked OSs for tablets. There may not be enough development time to outweigh the competitive advantage of getting to market soon enough to make a difference against the competition, which is not static but also changing. Witness Motorola, which could not afford the time required for MS to overcome the legacy software inertia. --Ancheta Wis (talk) 01:29, 8 October 2010 (UTC)

There were other concepts which MS scrubbed. For the fundamentals which Apple understands, see Don Norman, The Design of Everyday Things. As you can see, this went beyond the classic PC concept several decades ago. See especially Don Norman's '7 stages of action' in the pdf. --Ancheta Wis (talk) 03:15, 8 October 2010 (UTC)

- It appears that the designer behind the Windows Phone 7 UX (user experience -- he apparently started tiles, which are user-definable icons -- and hubs, which aggregate apps) talked in Feb 2010 --Ancheta Wis (talk) 11:03, 11 October 2010 (UTC)

While the merge discussion is proceeding, I propose to continue my search for citations in the direction outlined above. OK? --Ancheta Wis (talk) 14:56, 8 October 2010 (UTC)

Computer program linking and loading

The personal computer model obeys the time-honored model of computer program development begun in the 1940s. I argue here that tablet computers have forced upon us a retail/wholesale divide in that model. Specifically, the program load step in computer program development needs to be examined in more detail to show that this step still exists in tablet computer program development. From a programmer's POV, program load is unchanged for either tablets or PCs: a program loader copies the bytes of an executable file from a storage area (whether a solid-state disk, a USB external drive, read-only memory, or another source of data), in order, into program memory, where they are consumed in a fetch-execute cycle.
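The load step described above can be sketched in Python as a toy illustration under stated assumptions: the opcodes, the two-byte instruction format, and the names `load_program` and `run` are all hypothetical and model no real loader or instruction set. The loader copies an image's bytes in order into program memory and returns the entry point; the run loop then fetches and executes them.

```python
# Hypothetical opcodes for a toy accumulator machine (not any real ISA).
LOAD_IMM, ADD_IMM, STORE, HALT = 0x01, 0x02, 0x03, 0xFF


def load_program(image: bytes, memory: bytearray, base: int) -> int:
    """Copy an executable image, in order, into memory at `base`; return the entry point."""
    if base < 0 or base + len(image) > len(memory):
        raise MemoryError("image does not fit in program memory")
    memory[base:base + len(image)] = image
    return base  # execution starts at the first loaded byte


def run(memory: bytearray, pc: int) -> int:
    """Fetch-execute loop: each instruction is one opcode byte plus one operand byte."""
    acc = 0
    while True:
        opcode, operand = memory[pc], memory[pc + 1]  # fetch
        pc += 2
        if opcode == LOAD_IMM:                        # decode and execute
            acc = operand
        elif opcode == ADD_IMM:
            acc = (acc + operand) & 0xFF              # 8-bit wraparound
        elif opcode == STORE:
            memory[operand] = acc
        elif opcode == HALT:
            return acc
        else:
            raise ValueError(f"illegal opcode {opcode:#04x} at address {pc - 2}")


# An "executable file" as it might sit in storage: compute 40 + 2, store it at 0x10.
storage = bytes([LOAD_IMM, 40, ADD_IMM, 2, STORE, 0x10, HALT, 0x00])
memory = bytearray(256)
entry = load_program(storage, memory, base=0x80)
print(run(memory, entry), memory[0x10])  # prints 42 42
```

The split between `load_program` and `run` mirrors the separation in the text: whoever controls the load step decides which bytes ever reach the fetch-execute cycle.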

How does this traditional (i.e., PC) load process differ from program load on a tablet computer? First of all, as Vyx has steadfastly reminded us, a tablet computer executes a program which is not, in principle, wholly under the control of a single mind. A tablet vendor holds a retail key which, unless delegated to a programmer, controls program load. This retail key has been implemented in different ways by different vendors, who seem to have evolved their respective implementations in response to their individual urges to survive as entities. What I mean is that Apple's retail key to program 'load and go' (an IBM buzz-phrase which I co-opt) is membership in their App Store, which, as Vyx has kindly reminded us, costs a programmer $$$. Google's retail key is more distributed, but even program membership in Android Market is not automatic; it requires Google's permission. The MS retail key is somewhere in between: $$ for membership, etc.

What does this loss of wholesale freedom for an individual programmer buy us (the general non-programming public)? I think of it as the difference between the electric starter and the hand-cranked starter for an automobile. With the electric starter, women could insert a retail key into an ignition, start cars, and drive autonomously. In other words, tablets are not just for programmers to do with as we please; they are tools and appliances for us to use conveniently on a daily basis. They just happen to be computers, which will cannibalize the existing market for all programmers, but which will also liberate the rest of us when computing tools free us from having to remember dates, times, dollars, appointments, faces, tunes, etc., thereby making us collectively smarter.

But for the editors who ask "... and your point is?" I am asking that we lay down our arms and work on the article.

If the response is "but there will be a tablet vendor who will make it completely open," my response is that the existing vendors are attempting to maintain a critical mass which is observably larger than one enabled by the open model. It is observably true that a retail component is needed to make them succeed. My further claim is that a completely open enterprise will not be profitable in the short term. I agree that this claim remains to be proven, and we will not know for several years, but I predict that it will be shown to be true. --Ancheta Wis (talk) 03:04, 13 October 2010 (UTC)

人 (Chinese: rén; Korean: in; Japanese: hito, nin, jin). The character has two strokes, the first shown here in dark and the second in red. The black area represents the starting position of the writing instrument.


- ML, as an American, I see similarities between Lee Kuan Yew and Abraham Lincoln. Both were attempting to save their polities, one forced by history to unite his polity into a city-state, the other forced by history to unite a confederation (think Switzerland) into a republic (think Roman Republic). If you see difficulties including issues like this in the article, that is more evidence that the studies of the humanities are not of the same type as the studies of the hard sciences. If the position is that these sets of topics enjoy the study of a common underlying type, then perhaps that common type might be included. I believe that is what Peirce was discussing in the bulleted lists from Tetrast. It may be too much to hope that someday the publications of some researcher will unite a formerly disparate mass of facts about the social sciences into theorems of a larger science, as the American Josiah Willard Gibbs did for chemical engineering. I can think of the writings of some candidates (which I may not disclose by the rules of Wikipedia). --Ancheta Wis (talk) 03:40, 7 October 2010 (UTC)

Kenosis, I have been thinking about how to phrase a lede (no, not the lede of this article) so that a third grader could understand it. As I understand it, first and second graders can read sentences, add and subtract numbers, sit quietly, and play well with others. Please correct me if I am completely wrong. A third grader is learning how to multiply by rote, can compose grammatical sentences, and is perhaps learning some facts about the world.

A seventh grader learns about state history, some algebra, some science, and some geography. The New York Times attempts to write articles at a seventh-grade level.

So here goes:

Science is about 'reliable knowledge': things you know you can depend on about our world. People have been studying science for thousands of years, since before people knew how to write, when people only talked about things and showed each other how to do things with pictures and paintings, with songs and dances, and with customs: things that your parents did, and their parents did before them, and their parents' parents did before them, and so on, 100 sets of parents' parents' ... (repeat 100 times) parents before them. That was so long ago that the world was different then, with different animals, and different rivers, shorelines, deserts, grasslands, and forests than we have today. Science is also about how things will be in a future time, say in the time of your children's children's ... (repeat 100 times) children, long after you.

Can there be things that we know were true so long ago, and which will be true so long from now? Scientists, the people who figured out our science, say 'yes', because what we know all fits together. What we know is not about secrets, but is about what we can all know now.

Then why can't we just learn these true things, here, now? Why do we have to keep going to school? Because there is too much to know. The most complicated things we know are far more complicated than what we can remember — seven things at one time. Most of the time, we have to depend on each other to set up things so that we can do our jobs in the best way we know how — and each of us is different from the other people around us — with different talents and different weaknesses. So we go to school to learn what we do best, and to learn how best to depend on each other.

Most importantly, we need to learn how to learn, so we can keep learning for the rest of our lives.

Thousands of years ago

Our world had far fewer people. People mostly lived by hunting other animals and gathering plants. People could weave nets together to catch and hold what they wanted. People lived in small groups and only trusted those in their group.

Thousands of years from now

We cannot be absolutely certain, but we can make estimates:

Stable number of people

The world stabilized in the year 2050 at 9.5 billion people, when industrialization reached all continents of the world.

Shrinking number of people

The world continued its lifestyle improvement, which required that our economic systems continued consolidation of capital.

Expanding number of people

People continued colonization of the unsettled portions of the world: the oceans, the deserts, the mountains, Antarctica, and under the sea.

Wobbling number of people

World systems will grapple with issues and the population will rise or fall with available capital.

In the article on scientific method, we were asked to include a quote from Imre Lakatos into the section for the relationship with mathematics; in the search for a suitable quote, I was drawn into study of Lakatos' Proofs and Refutations, which uses an example from George Pólya's Mathematics and Plausible Reasoning, the Euler characteristic. The Euler formula V − E + F = 2 originally applied to polyhedra, with vertices V, edges E, and faces F; in the century after publication, various counterexamples (monsters) were found and buried until it was understood that 2 was not always the result to be expected when the objects studied were not the classic Greek solids.
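The formula and its "monsters" can be checked mechanically. In this minimal Python sketch (the function names and vertex numbering are illustrative assumptions), a polyhedron is given as a list of faces, each a cycle of vertex labels, and V, E, and F are counted from that list. A cube gives 2, while a "picture frame" polyhedron, a square ring of four trapezoidal prisms of the kind Lakatos discusses, gives 0.

```python
def euler_characteristic(faces):
    """Return V - E + F for a polyhedron given as a list of faces.

    Each face lists its vertex labels in cyclic order; an edge is an
    unordered pair of consecutive vertices, shared between two faces.
    """
    vertices = set()
    edges = set()
    for face in faces:
        vertices.update(face)
        for a, b in zip(face, face[1:] + face[:1]):
            edges.add(frozenset((a, b)))
    return len(vertices) - len(edges) + len(faces)


# Cube: vertex i has coordinates (i & 1, (i >> 1) & 1, (i >> 2) & 1).
CUBE = [[0, 1, 3, 2], [4, 5, 7, 6], [0, 1, 5, 4],
        [2, 3, 7, 6], [0, 2, 6, 4], [1, 3, 7, 5]]


def picture_frame():
    """Faces of a square ring built from four trapezoidal prisms.

    Vertices 0-3: top outer corners, 4-7: top inner corners,
    8-11: bottom outer corners, 12-15: bottom inner corners.
    """
    faces = []
    for k in range(4):
        n = (k + 1) % 4
        faces.append([k, n, 4 + n, 4 + k])            # top trapezoid
        faces.append([8 + k, 8 + n, 12 + n, 12 + k])  # bottom trapezoid
        faces.append([k, n, 8 + n, 8 + k])            # outer wall
        faces.append([4 + k, 4 + n, 12 + n, 12 + k])  # inner wall
    return faces


print("cube:", euler_characteristic(CUBE))                     # 8 - 12 + 6
print("picture frame:", euler_characteristic(picture_frame()))  # 16 - 32 + 16
```

Nothing in the counting code "knows" about holes; the 0 falls out of the face list alone, which is why such monsters could not simply be argued away.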

Before his untimely death, Lakatos came to view the search for proof as a vehicle for 'dominant theories' (such as logic, statistics, or computer science). Science, of course, is itself a dominant theory.

(So far, the best quotation I have found was

- "Epsilon: Really, Lambda, your unquenchable thirst for certainty is becoming tiresome! How many times do I have to tell you that we know nothing for certain? But your desire for certainty is making you raise very boring problems – and is blinding you to the interesting ones." (Proofs and Refutations, p. 126)

) -- Note Lakatos' technique of using characters with different voices, like Galileo in Two New Sciences, so that he can juggle POVs.

- @Eraserhead1, @Vyx, @Mahjongg, @Snottywong: Vyx asked me to contribute. There is at least one other issue. (Vyx, I would appreciate it if you would not interpolate comments into this contribution until after my signature.)

- 3: control of the target's system software, where the terms are defined in a debate which erupted on this page just after the iPad announcement. I am using Apple as the exemplar.

- To a consumer, the target system software is defined by the source of the target device, in this case, Apple.

- From Apple's POV, the iPad is an expression of Apple's intellectual property, which gives Apple a claim on the control of the iPad's system software and, to Apple, control of the vendors for its application software market. It appears that from Vyx's POV, the intervening 'computer operator' (for example, the guy in charge of inserting the punch card deck into the card reader of the mainframe) is the gatekeeper for the software being run on the mainframe on behalf of the programmers, and that the defining difference for a personal computer is that the punch card gatekeeper, the application programmer, and the user are one and the same person, as opposed to the mainframe case. Now for Apple, the gatekeeper is implemented by a whole ecology of software: what I am glibly calling the system software, but which in today's world is implemented by an IDE, a development host, a programming language, an operating system, and a deployment process to a target (the iPad).

- Before the aforementioned debate, I did not appreciate that Apple has found a 'sweet spot' in the possible environments for developing software. What I mean is that if Apple retains control of the entire process all the way to the point of deployment (i.e., from a PC POV, all the way to the Windows Installer), then it is possible to manage complexity in an appealing way. --Ancheta Wis (talk) 14:57, 17 September 2010 (UTC)

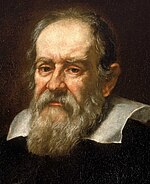

The following year, 1638, Galileo published his landmark experiments in Two New Sciences.[10]

- For a US-centric view: in physics, try Reviews of Modern Physics; in general science, especially biology, and for anything that would be of interest to all scientists, see Science (journal). You wouldn't go far afield to read the overview articles of the encyclopedia either. There is too much to cover, however, and it would be a disservice to the specialties to attempt a synoptic view of the state of the art of all the speculations, hypotheses and wild, currently discredited guesses that don't pop up in commentary until there is some sort of evidence for them. For example, arXiv has everything that a sufficiently brave researcher can throw at it; but in that case one's reputation (and future) is on the line. --Ancheta Wis (talk) 17:56, 21 June 2010 (UTC)

Simplicity

I undid a good-faith edit (as omitting the subtleties in the treatment of electrons in materials) on the following grounds:

- the thought processes are well known. They have been used to describe materials (e.g. gases, crystals to name the simplest cases) with decades of history (sometimes centuries of history).

- if we can't discuss simple cases first, but are constrained to the most general first, then we are ignoring the examples of Galileo, Newton, Bohr, Dirac etc.

- if we can't talk about something as simple as an electron, then we are ignoring items with literally centuries of background.

At its base, physics can be simple. If there is something I have learned from my teachers, it is at least this. And those teachers include several Nobel laureates, who were quite clear about the need NOT to obfuscate what can be simple; to do otherwise is, in fact, dishonest. --Ancheta Wis (talk) 01:52, 29 March 2010 (UTC)

I am trying to find a good article to point to from the history of computing hardware. As I am sure you all know, quantum computing elements rely on physical systems with a repeatable set of known states, regardless of the current value of each state: hence spin up/down, cat dead/alive, etc. In Haskell (programming language), it is not even necessary to resolve a value until the result of a computation is required: each potential calculation is wrapped up in a thunk, to be resolved only when it is absolutely necessary. Thus infinite data structures, such as the list of all natural numbers, can be manipulated neatly in this kind of language, since only the finite part actually demanded is ever computed.
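Python evaluates eagerly, so Haskell's call-by-need has to be emulated to show the idea. The sketch below is a minimal illustration under that assumption: `Thunk`, `naturals`, and `take` are hypothetical names, and GHC's real runtime representation of thunks is considerably more involved. A thunk stores a computation, runs it on first demand, and caches the result; an infinite stream is a head plus a thunk for the rest.

```python
class Thunk:
    """A suspended computation: run at most once, on first demand."""

    def __init__(self, compute):
        self._compute = compute
        self._evaluated = False
        self._value = None

    def force(self):
        if not self._evaluated:
            self._value = self._compute()
            self._evaluated = True
            self._compute = None  # release the closure once the value is cached
        return self._value


def naturals(n=0):
    """The infinite stream n, n+1, n+2, ... as a (head, thunk-of-tail) pair."""
    return (n, Thunk(lambda: naturals(n + 1)))


def take(k, stream):
    """Return the first k heads, forcing only the tails that are needed."""
    out = []
    for i in range(k):
        head, tail = stream
        out.append(head)
        if i + 1 < k:
            stream = tail.force()
    return out


print(take(10, naturals()))  # prints [0, 1, 2, 3, 4, 5, 6, 7, 8, 9]
```

Caching inside `force` is what distinguishes call-by-need from plain call-by-name: a shared thunk is evaluated once, however many times it is demanded.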

Hence my request: I need an article which makes the point that there are physical systems which are well known (i.e., not a muddle) and which ultimately resolve to real physical situations (spin up/down, etc.). Then, in the computing hardware article, I can simply reference the link.

It won't work to use the double-slit experiment, because the presence or absence of diffraction is not a reliable-enough (i.e., digital-enough) situation. It won't even work to use the quantum Hall effect because

- This might not be your setup. I recall seeing these symptoms years ago and dismissed them as database lag. Have you correlated these symptoms with the time of day, or with the times of database backup? (I admit I now have 2GB of memory and back then I had 1 GB or perhaps less, & I too upgraded to FF6, just now. So just because I see no side-effect, this is no guarantee of no FF bug somewhere) --Ancheta Wis (talk) 22:17, 15 March 2010 (UTC)

This phrase comes from Needham (2004) Science and Civilisation in China Vol 7 part 2, page 84, "China's Immanent Ethics": wei jen min fu wu! (為人民服務! In everything you do, let it be done for the people). The next sentences state "(in some future incarnation) ... I should pray for Chinese colleagues, the descendants of the sages, with their sense of justice and righteousness (liang hsin 良心)"

I notice that the characters from Needham are not the same as a very similar attribution to Mao. Doubtless Needham transcribed and translated this after hearing it verbally. --Ancheta Wis (talk) 01:34, 11 March 2010 (UTC)

Needham, Robinson & Huang 2004, p.218

Needham et al. 1986, p.x

- Needham, Joseph; Wang, Ling (王玲) (1954), Science and Civilisation in China, vol. 1 Introductory Orientations, Cambridge University Press

{{citation}}: Invalid|ref=harv(help) - Needham, Joseph; Wang, Ling (王玲) (1956), Science and Civilisation in China, vol. 2 History of Scientific Thought, Cambridge University Press

{{citation}}: Invalid|ref=harv(help) - Needham, Joseph; Wang, Ling (王玲) (1959), Science and Civilisation in China, vol. 3 Mathematics and the Sciences of the Heavens and the Earth, Cambridge University Press

{{citation}}: Invalid|ref=harv(help) - Needham, Joseph; Wang, Ling (王玲) (1962), Science and Civilisation in China, vol. 4 Physics and Physical Technology part 1 Physics, Cambridge University Press

{{citation}}: Invalid|ref=harv(help) - Needham, Joseph; Wang, Ling (王玲) (1965), Science and Civilisation in China, vol. 4 Physics and Physical Technology part 2 Mechanical Engineering, Cambridge University Press

{{citation}}: Invalid|ref=harv(help) - Needham, Joseph; Wang, Ling (王玲) (1971), Science and Civilisation in China, vol. 4 Physics and Physical Technology part 3 Civil Engineering, Cambridge University Press

{{citation}}: Invalid|ref=harv(help) - Needham, Joseph; Lu, Gwei-Djen (1974), Science and Civilisation in China, vol. 5 Chemistry and Chemical Technology part 2 Spagyrical Discovery and Invention: Magisteries of Gold and Immortality, Cambridge University Press

{{citation}}: Invalid|ref=harv(help) - Needham, Joseph; Ho, Ping-Yu; Lu, Gwei-Djen (1976), Science and Civilisation in China, vol. 5 Chemistry and Chemical Technology part 3 Spagyrical Discovery and Invention: Historical Survey, from Cinnabar Elixirs to Synthetic Insulin, Cambridge University Press

{{citation}}: Invalid|ref=harv(help) - Needham, Joseph; Lu, Gwei-Djen (1980), Science and Civilisation in China, vol. 5 Chemistry and Chemical Technology part 4 Spagyrical Discovery and Invention: Apparatus and Theory, Cambridge University Press

{{citation}}: Invalid|ref=harv(help) - Needham, Joseph; Lu, Gwei-Djen (1983), Science and Civilisation in China, vol. 5 Chemistry and Chemical Technology part 5 Spagyrical Discovery and Invention: Physiological Alchemy, Cambridge University Press

{{citation}}: Invalid|ref=harv(help) - Needham, Joseph; Tsien, Tsuen-Hsuin (1985), Science and Civilisation in China, vol. 5 Chemistry and Chemical Technology part 1 Paper and Printing, Cambridge University Press

{{citation}}: Invalid|ref=harv(help) - Needham, Joseph; Ho, Ping-Yu; Lu, Gwei-Djen; Wang, Ling (王玲) (1986), Science and Civilisation in China, vol. 5 Chemistry and Chemical Technology part 7 Military Technology: The Gunpowder Epic, Cambridge University Press

{{citation}}: Invalid|ref=harv(help) - Needham, Joseph; Lu, Gwei-Djen (1986), Science and Civilisation in China, vol. 6 Biology and Biological Technology part 1 Botany, Cambridge University Press

{{citation}}: Invalid|ref=harv(help) - Needham, Joseph (1988), Science and Civilisation in China, vol. 5 Chemistry and Chemical Technology part 9 Textile Technology: Spinning and Reeling, Cambridge University Press

{{citation}}:|first2=has numeric name (help);|first2=missing|last2=(help); Invalid|ref=harv(help) - Needham, Joseph; Bray, Francesca (1988), Science and Civilisation in China, vol. 6 Biology and Biological Technology part 2 Agriculture, Cambridge University Press

{{citation}}: Invalid|ref=harv(help) - Needham, Joseph; Yates, Robin D.S. (1994), Science and Civilisation in China, vol. 5 Chemistry and Chemical Technology part 6 Military Technology: Missiles and Sieges, Cambridge University Press

{{citation}}: Invalid|ref=harv(help) - Needham, Joseph; Harbsmeier, Christoph (1998), Science and Civilisation in China, vol. 7 The Social and Economic Background part 1 Language and Logic, Cambridge University Press

{{citation}}: Invalid|ref=harv(help) - Needham, Joseph; Robinson, Kenneth G.; Huang, Jen-Yu (2004), Science and Civilisation in China, vol. 7, part II General Conclusions and Reflections, Cambridge University Press


I would like to put in a little plug for Hueco Tanks in El Paso County, Texas, about 30 miles NE of the city of El Paso on Montana Avenue. There are Hopi-style masks painted on some of the cave walls, but the modern Hopi who come to visit can no longer speak to their meaning. There are paintings of corn tassels (which would have given the sacred corn pollen) and corn flowers (an Aztec food ingredient). There are nopales (prickly pear) in abundance for the eating, to this day. There are paintings of Tlaloc and Quetzalcoatl. I put the citations in the article. The people of El Paso (Puebloan, Aztec, Apache, Kiowa (and perhaps Wichita?), Spanish, Anglo) feel kinship with the markings on the cave walls. You can obviously see the various ancestries in the populace today.

Oh, did I mention the Folsom point which was found at Hueco Tanks? That says the region has been inhabited for 10,000 to 12,000 years, since just about the time the megafauna went extinct. I know for a fact that a ground sloth was found in a lava tube at Aden Crater, several miles north of Kilbourne Hole and 60 miles west of Hueco Tanks. That fits in nicely with the Folsom point. --Ancheta Wis (talk) 20:13, 3 March 2010 (UTC)

Needham (1956), Science and Civilisation in China vol. 2 History of Scientific Thought (697 pp.), on page 173, discusses Mohist fa, drawing on four parallel sources:

1) Than Chieh-Fu (ed.) Mo Ching I Chieh, Analysis of the Mohist Canon. Com Press (for Wuhan University), Shanghai, 1935. Needham abbreviates this source as Cs.

2) Fêng Yu-Lan, "Yuan Ju Mo" On the Origin of the Confucians and Mohists Chhing-Hua Hsüeh-Pao (Tsinghua University) Journal 1935 10 279.

3) Fêng Yu-Lan, '

- In case you need this, I always feel better when I hear Elmer Bernstein (1962), Theme from To Kill A Mockingbird. The reference to mockingbirds is that they hurt no man, and only sing to us. --Ancheta Wis (talk) 16:34, 19 February 2010 (UTC)

- Well, here is a disconnect. When I read the description before, I was seeing it statically. But if your concept is a flow from two corners to the top, then this picture does not follow from the words which I read. Logically, there could also be a flow from genetic & personal to cultural, which I would visualize as a flow in a direction other than bottom to top. But thinking about it, I can envision the two bottom corners converging in the center and then flowing to the top. This flow could symbolize an enculturated individual changing perception due to his/her personal experience. Other flows, say from the top corner merging with genomic expression, might symbolize the learning experiences of an individual, flowing to the culture corner and influencing his/her culture (a form of leadership). Yet another kind of flow, from the top corner merging with culture and flowing to the genomic-expression corner, might symbolize gene flow (effect on marriage & family). --Ancheta Wis (talk) 20:05, 18 February 2010 (UTC)

- Well, I can't help the feeling of revulsion. It would be quite as revolting as eating a stew and finding a monkey's hand inside it (which would look like a human baby's hand).

- Nobel Memorial laureate Herbert Simon, in his 1991 autobiography Models of My Life, recounts sensing a sudden emotional coldness from his hosts at a dinner party, who were as urbane and understanding as he was. The precipitating event was his posing a thought experiment: raising a robot with artificial intelligence (a field dear to his heart, as one of its founders) as a human child. His hosts reacted with coldness, and Simon hastily back-pedaled. --Ancheta Wis (talk) 08:29, 13 February 2010 (UTC)

- Simon, p. 312 writes of "a gleam of understanding over my driver's face" when Simon posed a question about ethnicity in Peru.

- I think the point is to arouse that gleam of understanding over the Internet, without the feedback of speaking face-to-face. --Ancheta Wis (talk) 08:54, 13 February 2010 (UTC)

- Simon, p. 152 writes how Elliott Dunlap Smith's role-plays (as teaching devices) indelibly taught how we reveal ourselves to others. In other words, we ourselves control how others will come to see us. --Ancheta Wis (talk) 09:03, 13 February 2010 (UTC)

Type (model theory) was recently removed from See also; I can see its use as a way to abstract the grammar of statements about types. In that sense, it is related to the other Type articles as a container or context. Some semantics (of client theories) can be documented this way. --Ancheta Wis (talk) 15:42, 12 February 2010 (UTC)

- Damir Ibrisimovic, it's different from the Stanford Encyclopedia of Philosophy or Citizendium. Wikipedia's tradition is that everyone is an administrator of the article, and everyone is an editor of the article. No one is given any currency except that afforded by the immediate circle of editors of the current article under the searchlight. So if the article became too 'hot', the buzz would attract more editors in a positive feedback loop, until the cumulative changes fall of their own weight, and the article then sinks back into quiescence. But if an editor were to violate some policy, then the entire weight of the encyclopedia falls on the hapless editor and no one can save him. Thus editing the encyclopedia treads a fine line which depends entirely on the readership of that article.

- To all editors:

- I propose to archive a subset of the talk page as denoted above. If there are any specific talk threads which any editor wishes to leave un-archived, please respond here. I will wait one week. In the meantime, the talk page will continue to serve as a waystation for improvement of the article. --Ancheta Wis (talk) 23:17, 9 February 2010 (UTC)

When I came across this concept, I was confused: does the adjective 'Franco-' refer to a political entity, a temporary alliance, a pejorative, ... what? It appears to refer to a still-controversial topic or to a possible historical event which is yet to be proven.

The slant which disturbed me is that to me, 'Franco-' is not the same as 'Frankish-' or φράγκοι or Ferengi (in Hindustan). All of these appear to be names that were used, from an eastern POV, to refer to the Franks (i.e. Frangistan, the land of the Franks), which would possibly be what is now referred to as France (that's what I associate with 'Franco-'). If that is so, then why is the article obscurely named? The Ferengi were the Crusaders of Christendom.

I now retreat to wait and read, but it is disturbing that the title of the article is Franco-Mongol alliance while the referents in the first paragraphs are denoted Franks. Why not call them Ferengi? Oh but wait, that name was hijacked by Roddenberry. --Ancheta Wis (talk) 13:18, 9 February 2010 (UTC)

- Your responses are revealing because they show that you have not considered that there can be computers without operating systems. The wildest one that has been mentioned to me was a two by four with notches in the corners to flip the rows of toggle switches, in order to start initial program load. I regret that I did not ask the name of the technician who thought of it. --Ancheta Wis (talk) 12:18, 1 February 2010 (UTC)

- I think it should be clear to you that in the future, when tablets are commonplace, someone will use one to write a computer program which will then be executed by a blind CPU somewhere. OK? In fact people are doing that now on hosts which happen to be browsers for targets which also happen to be browsers (this alone shows that the programs need not be executed on a specified piece of hardware, such as a PC, because browsers just happen to be designed to be OS-independent). But that is not the purpose of the article which is on the verge of bursting forth out of this one. --Ancheta Wis (talk) 12:18, 1 February 2010 (UTC)

- To give an example which is not iPhone or Apple-centric: If there were some Android tablet (like the Barnes & Noble Nook, for example) and some Android developer had already developed something (such as an astronomy application, to pull an example out of the air, such as the phase of the moon, for some vampire) to run on an HTC G1, for example, then all that developer would have to do to port his vampire's application to the Nook would be to change the 'size of the application display'. His targets were the G1 and the Nook (the tablet computer), and his host development system would just have to be something that runs Java 6, Eclipse 3.5, and Android 2.1 (as we speak). His host could be a PC, a Mac running an emulator, or a mainframe. To go even farther afield, his target could be an embedded system such as the chip on a greeting card starring Hoops and Yoyo or the chip on a smart card. --Ancheta Wis (talk) 04:30, 1 February 2010 (UTC)

- Yes, indeedee! By golly, I can even read, and I think I am able to understand the English language! ..<<Dead silence here, followed by the tolling of faraway bells>>.. I also am not in the habit of ascribing or projecting the mental states of other sentient beings. According to this talk page, "Tablet PC" is trademarked by Microsoft. That alone justifies the page move, while we are on the subject of freedom. It even explains all the preemptive edits which systematically remove any references to iPad, now that I think about it. And no, I am not a Mac person; I haven't even touched a Mac in 21 years.

- According to this source, Microsoft Tablet PC software was available in 2003, but only to OEMs. How is that free and open?

- Here is a slate PC presentation from CES, in which three Microsoft partners displayed devices. Pegatron showed a larger-screen display than Archos, which showed its personal media player running Windows 7. HP showed a slate running Kindle software, which Ballmer held up while a canned demo video rolled. If we are talking openness and freedom, then why weren't the demos more 'live' than the iPad demo? What kind of POV is this? How free and unlimited is this? --Ancheta Wis (talk) 17:01, 31 January 2010 (UTC)

- Are you now supporting the notion of allowing iPad into this article? If not, then we ought to separate the redirect of tablet computer from this article, let the tablet computer article stand on its own, and put the category:tablet computer into the iPad article instead of the current category:tablet PC.

- Or might you support the notion of separating yet another article from this one: the slate PC. I see that it too is currently redirected to this very article. --Ancheta Wis (talk) 17:01, 31 January 2010 (UTC)

- I see that slate computer is currently a redirect to a section in this article. That section, which could conceivably encompass the iPad, is currently defining itself as a type of tablet PC. Will this change? --Ancheta Wis (talk) 17:01, 31 January 2010 (UTC)

- The HP Slate will be available sometime in 2010. According to this source, it was prototyped as an e-reader in the UK in 2005 and then improved from user feedback, and the product could have been released 2 years ago for $1500, which is why HP has waited until now to demo it at CES. --Ancheta Wis (talk) 17:01, 31 January 2010 (UTC)

- It is only fair to point out that the category:tablet PC is not under discussion for the move. The presumption is that any eponymous category would be up for discussion in a different place than this page. But when a game-changer appears, it would be foolish to hold fast or ignore it. I should comment that Bill Gates predicted the success of the Tablet PC in 2001 with little result; it took a different set of players to create the ecosystem which enabled the imminent success of the Tablet computer.

- It may be more politic to separate the current redirect of Tablet computer to a separate article with a different agenda. The iPad article currently links to this one, which actually started the whole fracas.

- The action to sever the redirect would have the advantage of letting the editors of this article have their own space. The action to separate the names would allow another set of editors to create an entirely different one in peaceful coexistence (different article, different category, different class of device, as avowed by the current set of editors). Agreed?

- I propose to implement that change after the seven-day timer has expired for the current proposal.

In Haskell, "a function is a first-class citizen"[11] of the programming language. As a functional programming language, the primary control construct is the function; the language is rooted in the observations of Haskell Curry (1934, 1958, 1969) and his intellectual descendants, that "a proof is a program; the formula it proves is a type for the program".
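As a hedged illustration of what "first-class" means in practice, the sketch below shows functions being bound to names, passed as arguments, and returned as results. It is written in Python rather than Haskell so that all examples here use one language; the helper names `compose`, `twice`, `increment`, and `double` are hypothetical, and `compose` only mimics Haskell's `(.)` operator.

```python
from typing import Callable

IntFn = Callable[[int], int]

def compose(f: IntFn, g: IntFn) -> IntFn:
    """Return a new function applying g first, then f (analogous to Haskell's f . g)."""
    return lambda x: f(g(x))

def twice(f: IntFn) -> IntFn:
    """Take a function as an argument and return a new function as the result."""
    return compose(f, f)

def increment(x: int) -> int:
    return x + 1

def double(x: int) -> int:
    return x * 2

add_two = twice(increment)                    # a function built from a function
double_then_inc = compose(increment, double)  # double first, then increment

print(add_two(5))          # 7
print(double_then_inc(5))  # 11
```

In Haskell the same ideas need no helper machinery: `(.)` is in the Prelude, and `twice f = f . f` is an ordinary definition.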

- While searching for the requested quote I found this post in Proofs and Refutations: "Many working mathematicians are puzzled about what proofs are for if they do not prove.... Applied mathematicians usually try to solve this dilemma by a shamefaced but firm belief that the proofs of the pure mathematicians are 'complete' and so really prove. Pure mathematicians, however, know better - they have such respect only for the complete proofs of logicians. [e.g. Hardy 1928]: 'There is strictly speaking no such thing as mathematical proof; we can, in the last analysis, do nothing but point; ... proofs are what Littlewood and I call gas, rhetorical flourishes designed to affect psychology, pictures on the board in the lecture, devices to stimulate the imagination of pupils.' ... G.Polya points out that proofs, even if incomplete, establish connections between mathematical facts and this helps us keep them in our memory: proofs yield a mnemotechnic system [1945]. (pp. 31–32) BETA: Not 'guesswork' this time, but insight! TEACHER: I abhor your pretentious 'insight'. I respect conscious guessing, because it comes from the best human qualities: courage and modesty. (p. 32)"

- First, I would like to thank the anon 93.86.x.y for leading me to Lakatos, Worrall and Zahar (1976), Proofs and Refutations: the logic of mathematical discovery, Cambridge, ISBN 0-521-21078-X, which is a very pleasant read. I have found some candidate quotations which I am asking the editors of this article to select from:

- p.

- I like your changes, but I can't understand why "The monad acts as a framework" isn't part of the lede sentence instead of the familiar "abstract data type" phrase. It is too easy for a standard C++ programmer to read the familiar phrase and get completely misled, because the whole monad concept is post-imperative (read: it will take study and a commitment to learn a whole new set of concepts, first).

- For the historically minded, a Eugenio Moggi article would have helped. But it doesn't exist yet. I personally recommend Gordon's thesis Functional Programming and Input/Output for background.
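To make "the monad acts as a framework" concrete for a programmer coming from C++, here is a minimal sketch of the Maybe monad's bind operation. It is written in Python rather than Haskell, with `None` standing in for Haskell's `Nothing`. The point it illustrates is that each step either produces a value or signals failure, and the framework (`bind`), rather than the individual step, decides whether to continue. The names `bind`, `safe_div`, `safe_sqrt`, and `pipeline` are hypothetical, and using `None` as the failure marker is a simplification that Haskell's `Maybe` type does not need.

```python
import math
from typing import Callable, Optional, TypeVar

A = TypeVar("A")
B = TypeVar("B")

def bind(m: Optional[A], f: Callable[[A], Optional[B]]) -> Optional[B]:
    """Maybe-style bind (Haskell's >>=): short-circuit on None, else pass the value on."""
    return None if m is None else f(m)

def safe_div(x: float, y: float) -> Optional[float]:
    # Division that signals failure instead of raising an exception.
    return None if y == 0 else x / y

def safe_sqrt(x: float) -> Optional[float]:
    # Square root that signals failure for negative input.
    return None if x < 0 else math.sqrt(x)

def pipeline(x: float, y: float) -> Optional[float]:
    # The steps are chained by bind; no explicit failure check is written between them.
    return bind(safe_div(x, y), safe_sqrt)

print(pipeline(8.0, 2.0))   # 2.0
print(pipeline(1.0, 0.0))   # None
print(pipeline(-8.0, 2.0))  # None
```

In Haskell the corresponding chain would be written `safeDiv x y >>= safeSqrt`, with the type system rather than a runtime check enforcing that failure is handled.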

- Since functions are the only control construct in functional programming,

- Marax, it may be helpful to reread about Bacon's idols. --Ancheta Wis (talk) 11:30, 23 October 2009 (UTC)

- And just to be clear, all I mean about induction is the standard viewpoint:

| Step | Induction | Deduction |
|---|---|---|
| 1 | Start from a case | Start from a general statement |
| 2 | Examine the particulars of that case | Give a specific example of the general, as a type |
| 3 | Repeat, next particular, find commonality | Explicate the type, in propositions |
| 4 | Generalize to the collection as a type | Give expected result for that type |

- As the type of anything is the heart of a scientific statement, it is a reflection of the judgement of the investigator. It selects what is important or crucial about the proposition.

- For example, in Newton's theory, a mass is a property of some object. In this case, the mass of that object allows a statement about any other object of the same type (e.g., mass points, rigid bodies, fluids etc.)

- As another example, a physician can be considered to be an expert in health issues. In this case, the expertise of the physician can be typed: good, expert, quack, etc.

- In Maxwell's theory, charge is the relevant base type.

- In finance, the cash position is the basic type.

- My favorite example is the private soldiers in The Dirty Dozen, each of whom rehabilitates himself, by personal heroism, as worthy of a general discharge as an ordinary soldier (as a type).

- Naturally, it is a conceptual error to confuse the general statement in deductive reasoning with a causal statement, for example "all physicians are experts".

- None of this is new. --Ancheta Wis (talk) 13:32, 2 October 2009 (UTC)

- On the face of it, the sentence "His methods of reasoning were later systematized by Mill's Methods" is an oversimplification. If it were true, then we would be able to find a citation. I propose commenting out the sentence until the originating editor can give a citation.

- --Ancheta Wis (talk) 03:36, 1 October 2009 (UTC)

--Ancheta Wis (talk) 23:19, 30 September 2009 (UTC)

- I have an idea: what if there were another section, say Reprise -

Proposed beginning for a new section: Reprise

"Truth is sought for its own sake ... Finding the truth is difficult, and the road to it is rough. For the truths are plunged in obscurity. ... God, however, has not preserved the scientist from error and has not safeguarded science from shortcomings and faults. If this had been the case, scientists would not have disagreed upon any point of science. ... It is not the person who studies the books of his predecessors and gives a free rein to his natural disposition to regard them favourably who is the real seeker after truth. But rather the person who is thinking about them is filled with doubts, who holds back with his judgement with respect to what they say, who follows proof and demonstration rather than the assertions of a man whose natural disposition is characterized by all kinds of defects and shortcomings. A person who studies scientific books with a view to knowing the truth ought to turn himself into a hostile critic of everything that he studies. ... And ... he should also be suspicious of himself ... If he takes this course, the truth will be revealed to him and the flaws in the writings of his predecessors will stand out clearly. ... " —Alhazen, (Ibn Al-Haytham) Critique of Ptolemy, translated by S. Pines, Actes X Congrès internationale d'histoire des sciences, Vol I Ithaca 1962, as referenced on p.139 of Sambursky, Shmuel (ed.) (1974), Physical Thought from the Presocratics to the Quantum Physicists, Pica Press, ISBN 0-87663-712-8

- - perhaps retrospective claims, including criticism, and also impact, of scientific method, might be put in this proposed section.

- - perhaps this proposed section might be placed at the end of the article, just after the 'Relationship with mathematics' section. --Ancheta Wis (talk) 16:12, 24 September 2009 (UTC)

- The basic reason that diagnosis per se does not lead to scientific law is that it is part of an application of knowledge (here, medicine) and the mission of a physician/clinician is to seek a cure if possible.

- It's not the authority of the experts, it's their ability to separate what is meaningful from extraneous chaff; since they have worked in the field for so long or so extensively, what is meaningful is second nature to them. A demonstration follows:

- Science did not spring full-blown from the brow of any human being; it evolved from the needs of humanity, step by step. (I believe this is obvious, but the quotation from Alhazen should prove it: if scientists were as infallible as angels, no controversy would ever have arisen from scientific effort. Compare James Madison: "If men were angels, no government would be necessary.") And there seems to be no lack of controversy when people congregate in large enough groups.

- Categorical statements, like that of Thales, document the beginning of the scientific impulse (meaning the desire to get at the root of things, their nature). Thales' specific hypothesis "All things rise from water" was challenged almost immediately by Anaximander, one of his students. This hypothesis is truly part of the history of science because it was falsifiable.

- When civilizations like that of the Egyptians painstakingly accumulated cures for human diseases, along with diagnoses and prognoses for those diseases, this served as part of the foundation of knowledge, some of which is still in use in medicine today.

- When subsequent civilizations, like the Greeks, observed the successes and failures of their predecessors, they began to examine why. This too is part of the scientific enterprise and of any standard history-of-science book.

- Once successes began to accumulate in an obvious mass, such as that evinced by Stevinus' experiment to drop weights from a height, the rolling of balls down a ramp by Galileo, or the demonstration of the finite speed of light by Roemer, it became possible to start to categorize just what it takes to progress in science. It is not only philosophers of science who study this, but also practicing scientists, for example those who are experiencing a change of interest and who seek to return to their roots.

- The traditions of medicine are one thread in the tapestry leading to the rubric of science and those methods which got humankind to the successes of science. You already have SteveMcCluskey's citation. --Ancheta Wis (talk) 19:31, 21 September 2009 (UTC)

- My motivation is different; when I first edited Wikipedia, I was aided immensely by User:Jnc, an acknowledged expert in the internet who contributed to it in its early days: he was chased away by hostile treatment. Eventually the miscreant was detected and banned but the damage was done by then. Since that time I have resolved to myself that when I detect a 'person of substance' I will do my part in protecting that person from being chased away from the encyclopedia. --Ancheta Wis (talk) 21:13, 21 September 2009 (UTC)

- When googling "a method used by mathematicians, that of 'investigating from a hypothesis' " I found a commentary on Meno: Michael Cormack (2006) Plato's stepping stones: degrees of moral virtue --Ancheta Wis (talk) 01:02, 19 August 2009 (UTC)

συλλογισμὸς ἐξ ὑποθέσεως syllogism from hypothesis —Preceding unsigned comment added by 24.106.44.158 (talk) 01:31, 19 August 2009 (UTC)

- Motion has both spatial and temporal features in its description. The visual system of the brain has change-sensitive components; a saccade of the eye across a regular array of features would also be interpreted by the brain as a series of pulses, just as if one were to stimulate it with a pulse train; if one googles "tuned spatial filter" one can find some pertinent statements about vision. However this discussion is probably taking place on the wrong page. Spatial filtering was studied by television engineers like Albert Rose 60 years ago. On this page, one appears to be restricted to dealing with slices of brain being stimulated as if it were being fed images by a live eye. --Ancheta Wis (talk) 14:16, 1 August 2009 (UTC)

Now that Kenosis has explicitly placed Confirmation into Thomas Brody's 'epistemic cycle', at what stage is it? Is Confirmation at the end of a cycle, the middle, or a new beginning? Would Kuhn have called this simply part of 'normal science'?

I believe Confirmation to be akin to Victory in battle; that is, in the grander scheme, a victory simply ratifies an already existing condition in the hearts and minds of men, and a new set of forces can then arise. Or, as Kuhn puts it, a new paradigm has arisen. Just as the placement of the keystone in an arch is the last step in construction of the arch, which is then stable against collapse, so too, Confirmation is the end of a series of events; something has been settled, not just for the individual researcher who has made a discovery, but also some question has been settled in a larger community of researchers and allies who share something of the same vocabulary, definitions, processes, agreements, and allegiances. When viewed in a larger theater, a victory in battle then allows troop formations to be re-deployed, to fight again; in that sense, victory is elusive, as war in a larger theater can still be lost, and the confirmation step is but one in a larger epistemic cycle. Lastly, Confirmation is a new beginning in a larger understanding of the scheme of things.

A prototype for this is Newton's System of the World, in Book Three of Philosophiæ Naturalis Principia Mathematica. Newton's Laws have been confirmed, and the System of the World is subject to them. This was the viewpoint for two hundred years, at the end of which Einstein could ask himself 'what would the world look like if I were to ride on a beam of light?'. The answer, of course, overturned Newton's System of the World and a new world order arose, in another epistemic cycle.

In this light, I propose moving the example of the precession of the perihelion of Mercury to the Confirmation section, as a signal that a new cycle, a new battle in a larger war, is looming, the end of which has not been fully determined to this day. Although General Relativity is a victory in a smaller skirmish, it is an open question when and how the keystone unifying relativity and quantum mechanics will be placed.

In the DNA example, which is a smaller one, compared to the unfinished structure in physics, Confirmation was the acceptance of the structure of DNA by the community of researchers. Crick and a series of other researchers could then devote their attention to the unravelling of the genetic code. In this viewpoint, the 'Transforming principle' discovered by Avery, MacLeod, and McCarty (1944) was finally accepted in the larger community of researchers, after the discovery of the structure of DNA (1953); a Nobel prize was never awarded to Avery.

To follow up on the Victory analogy, after victory in World War II, Churchill was unseated, as men could then face newer, more pressing matters than winning a war.

- Other reasons for its length are the interrelation between method and history, the fact that method is intimately tied to thinking, and the fact that scientific method is specialized for discovering new knowledge. Although it may seem that method could be further simplified or automated, and some progress has been made in this direction, the fact remains that so far, progress in science depends on talented people. It could be argued that scientific method allows for a division of responsibility, so that less talented people can also contribute to science. However, it takes insight for progress to occur in science. Lee Smolin has commented that the character of the researchers determines the direction of their results. The ambitions of Francis Crick and James Watson are exemplars of this fact. A quotation from Alhazen himself, on this page (the one that says "...God, however, has not preserved the scientist from error..."), shows this has been true for at least a thousand years.

- While investigating "Hebbian potentiation in Aplysia" I found "Enhancement of sensorimotor connections by conditioning-related stimulation in Aplysia depends upon postsynaptic Ca2+", which has a 14-word title, a 250-word abstract, and a six-page article, freely available on the web. However, the article would take a layman weeks to understand, so this violates the 'intersubjective' requirement. The basics for 'verifiability' are simpler. Donald Hebb posited a mechanism for learning in 1949 which has served as a framework for subsequent research in neuroscience. Long-term potentiation is a neurological phenomenon discovered in rabbit hippocampus; Eric Kandel realized that Aplysia, the California sea slug, which has much larger neurons, might be a better research animal than rabbits, mice, or macaques; Kandel got the 2000 Nobel prize in physiology or medicine for his work in neurobiology. As a hypothesis, LTP is thought to lie at the root of learning and memory. A host of researchers are at work on this, in multiple fields.

- The topic is not popular knowledge yet, but if it got into the article, perhaps it might become a lay topic.

- The general topic article for this is synaptic plasticity, which takes as thesis the idea that our neural dendrites undergo (reversible) physical changes when we learn.

durch planmässiges Tattonieren (through systematic palpable experimentation). —Carl Friedrich Gauss, when asked how he came about his theorems. Alan L Mackay (ed. 1991), Dictionary of Scientific Quotations London: IOP Publishing Ltd ISBN 0-7503-6106-6

Perhaps more examples would better serve the article, such as mirror neurons.

Giacomo Rizzolatti and Laila Craighero (2004), "The mirror-neuron system" Annual Review of Neuroscience 27: 169-192 (July 2004) doi:10.1146/annurev.neuro.27.070203.144230 Some history behind Rizzolatti's discovery

One idea might be to publicize some simple (though non-obvious) observations which are reproducible, with constraints on the conditions. For example, if you are fortunate enough to have a newborn baby, during its sensitive period for this, just stick out your tongue at it, and your baby will imitate this. Older babies, children, and adults will not do this spontaneously. Human newborn babies and primate newborns have this capability in common, as shown in the image.

It is thought that mothers and fathers, while playing with their babies, have used the basis behind this phenomenon since time immemorial to teach the babies by imitation (i.e. call this hypothesis 1). Some speculate that difficulties with the mirror-neuron 'circuitry' may lie at the root of autism (call this hypothesis 1-1). As an example of scientific method in action, we have a surprising observation (the image), attendant hypotheses (1 and 1-1), predictions from the hypotheses (for example the mathematical model below), and suggested experiments (see below) to validate the concepts.

First images of a memory being formed, in Aplysia, the California sea slug.

Other examples for the article might be the hypotheses that mechanisms for synaptic plasticity of the chemical synapses lie at the root of learning and memory. There are now mathematical models for the shape of neurons' action potentials in the hippocampus, the amygdala, and other parts of the brain. There are general frameworks, such as learning based on Hebbian theory which have existed since the 1940s, and the research in neurobiology using California sea slugs has even yielded a Nobel prize for Eric Kandel. --Ancheta Wis (talk) 09:05, 12 June 2009 (UTC)

Perhaps an example from neurobiology might help the article. For example, if we were to discuss Hebb's rule as a framework in molecular psychology for organizing research on chemical synapses, list some hypotheses which stem from it (such as long-term potentiation being the neural correlate of memory and learning), give some predictions (such as [http://www.pubmedcentral.nih.gov/articlerender.fcgi?artid=2246410 Philip Hunter (2008) "Ancient rules of memory. The molecules and mechanisms of memory evolved long before their 'modern' use in the brain" EMBO Rep. 9(2): 124–126, doi:10.1038/sj.embor.2008.5]), and cite experimental results from work on California sea slugs, then perhaps more interest might be stimulated in the article. --Ancheta Wis (talk) 12:43, 9 June 2009 (UTC)
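Hebb's rule is often summarized in its simplest textbook form as: a connection strengthens when the units on both sides of it are active together, Δw = η·x·y, where x is presynaptic activity, y is postsynaptic activity, and η is a learning rate. The sketch below is a toy illustration under those assumptions only (rate-based units, a hypothetical learning rate `eta`, no decay or normalization, and a hypothetical helper `hebbian_update`). It is not a model of LTP or of Aplysia synapses.

```python
from typing import List

def hebbian_update(weights: List[float], pre: List[float], post: float,
                   eta: float = 0.1) -> List[float]:
    """Plain Hebbian rule: w_i <- w_i + eta * pre_i * post.

    There is no decay term, so weights can grow without bound.
    """
    return [w + eta * x * post for w, x in zip(weights, pre)]

weights = [0.0, 0.0]
# Input 0 fires together with the postsynaptic unit on every trial; input 1 never fires.
for _ in range(5):
    weights = hebbian_update(weights, pre=[1.0, 0.0], post=1.0)

print(weights)  # approximately [0.5, 0.0]
```

Only the co-active connection is strengthened. This is the qualitative behavior the LTP-as-memory hypothesis appeals to, though real synaptic plasticity involves far more machinery than this single multiplication.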

I propose a second extended example, from neuroscience: LTP (long-term potentiation). There is enough out there that a popular-science book on synaptic plasticity has appeared which mentions LTP, but the topic is not widespread yet, thirty-five years after its discovery by T. Lømo and T. Bliss. (ref for example Tim Bliss, Graham Collingridge and Richard Morris, editors (2004) Long-term Potentiation: Enhancing Neuroscience for 30 Years ISBN 0-19-853030-7 )

N. Volfovsky, H. Parnas, M. Segal, and E. Korkotian "Geometry of Dendritic Spines Affects Calcium Dynamics in Hippocampal Neurons: Theory and Experiments" The Journal of Neurophysiology Vol. 82 No. 1 July 1999, pp. 450-462

One can view the scientific method as a response to our limitations; cognitively, we tend to 'fill in the blanks' or 'connect the dots' when faced with gaps in our understanding. S. T. Coleridge referred to this phenomenon as the "esemplastic nature of the imagination", in which we unify our current sensations, percepts, and concepts into a seemingly seamless whole. These aspects of wholeness have been called qualia. (ref V. S. Ramachandran (1998) Phantoms in the Brain ISBN 0-965-068019)

Thus we have a subject with

- 1) a known framework which has existed since the 1960s

- 2) a hypothesis that LTP is the neural correlate and the foundation of memory (and perhaps learning)

- 3) a ton of predictions, and

- 4) corresponding experiments

and even better, there is controversy over whether the hypothesis is proven, as of 2008.

The subject is huge, and even Nobel laureates have been outdistanced in the race for priority.

What say you? --Ancheta Wis (talk) 12:42, 4 June 2009 (UTC)

- Jsd, your thoughts are demonstrably inaccurate.

- You misattribute a quote to Tetrast. It might help if you read more carefully.

- You confuse mathematical physics with theoretical physics.

- Based on this confusion, you attack.

- You criticize a classical model from 2300 years ago, and Peirce's model, as not part of the article, when in fact they formed it.

- --Ancheta Wis (talk) 15:37, 3 June 2009 (UTC)

"Ditto for Acton, Numerical Methods that Work. Are these methods not methods? Is computer science not science? Why should these not count as scientific methods?" -- from Jsd

- Jsd, thank you for showing some of your sources. In general, the limitations of an algorithm (here, the numerical methods) need to be considered along with the problem it is attacking. CS (computer science) needs to be distinguished from engineering, which is the general domain for numerical methods. In this case, the hypothesis is that the engineering has been done before the numerical run, meaning that the numerical run will behave in an expected way. How do you know the method will not fail, without a clear statement of the limitations of the method? I guarantee you that blind reliance on an algorithm will run the system, blindly, until something fails (like the current state of the global economy) and the debacle is obvious to everyone.

- In other words, for a numerical run, the researcher has to have an expectation about the answer. Otherwise the researcher is flying blind, and we are confronted with the spectacle of a man with a machine and no insight. (This is a paraphrase of Richard Hamming; see his quotes.)
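A small illustration of why the limitations of a method must be known in advance: the explicit (forward) Euler method applied to y' = -10y. This is a minimal Python sketch of my own, not an example taken from Acton; the function names are hypothetical. For this equation the method is only stable for step sizes h < 2/10, so one run respects the limit and one does not.

```python
import math


def forward_euler(f, y0, h, steps):
    """Integrate y' = f(y) from y(0) = y0 with the explicit (forward) Euler method."""
    y = y0
    for _ in range(steps):
        y = y + h * f(y)
    return y


def decay(y, lam=10.0):
    """Right-hand side of the test equation y' = -lam * y."""
    return -lam * y


T_END = 5.0
exact = math.exp(-10.0 * T_END)  # true solution at t = 5, about 2e-22

# h = 0.05 satisfies the stability limit h < 2 / lam = 0.2 (100 steps to t = 5).
small_step = forward_euler(decay, 1.0, 0.05, 100)
# h = 0.25 violates it: each step multiplies y by (1 - 0.25 * 10) = -1.5 (20 steps to t = 5).
large_step = forward_euler(decay, 1.0, 0.25, 20)

print(f"exact      {exact:.3e}")
print(f"h = 0.05   {small_step:.3e}")
print(f"h = 0.25   {large_step:.3e}")
```

The second run returns a value in the thousands, its sign flipping at every step along the way, even though the true answer is essentially zero; nothing in the code warns of this. Only someone who knows the method's stability limit expects the failure.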

Tambays, I was surprised to note the omission of any Philippine example in the Green Man article. From my readings, for example at a UCLA art exhibit, I learned that well before the arrival of the Spanish, vegetation figures (carved of ferns etc.) were set up to scare off outsiders and to mark territory. Does anyone have additional information? --Ancheta Wis (talk) 17:56, 26 May 2009 (UTC)

I propose another example, long term potentiation (LTP); like DNA, it has a long history, with roots extending back to the nineteenth century, at least as far back as Ramón y Cajal's research. One of the advantages of presenting LTP as an example might be that LTP rests on the DNA example, and might serve as an illustration of the interconnectedness of the scientific enterprise. An LTP example could illustrate the hard work that one must perform in the service of science, such as the establishment of terminology, setting scope, understanding of technique and underlying technology (such as mouse physiology), and could also illustrate the lure of attractive analogies, such as that between LTP and long term memory. --Ancheta Wis (talk) 11:48, 20 May 2009 (UTC)

- If 'the science is the science', meaning that the science we discover need not coincide with our previous beliefs about a subject, but that the truth we discover is what we have to live with afterward, then this is a Pandora's box for humankind. For example, in physics, we are learning that the world we live in is fantastically improbable; that we live in a world far from equilibrium and are doomed to return to a cold, bleak nothingness; but also that we came from the heavens, that we are literally made from the stars.

- Whewell thought long and hard about method.

Here is some data about risk in computer models. Overreliance on value at risk was a factor in the global financial crisis. --Ancheta Wis (talk) 15:06, 3 March 2009 (UTC)

One concern about the operational paradigm which comes to mind is the separation of magic from science. The separation occurred in the West during the seventeenth century (p. 86, Joseph Needham, Colin A. Ronan, The Shorter Science and Civilisation in China). What is to keep some gullible government agency from funding a completely unfounded speculation, rather than funding a proposal whose projections are firmly grounded in scientific experiment? An administration seeking more bang for the taxpayer buck might select the proposal promising the greater projected return on investment, rather than the increase in understanding of a topic. It takes vision to make such a selection; the current state of the art in business, for example, is the spreadsheet, which takes quarterly results, finds a mathematical expression which embodies those results, and simply projects them forward. It doesn't take much imagination to conjure up a mess, which is the state of our global economy today. What is to keep debacles in mathematical modeling from recurring, given the primitive state of the models? If a paradigm ever needed reexamination, it would be the blind use of models in place of understanding, the comprehension of an issue. One might argue that blame for the current state of our global economy rests on the mathematical wizards who uncritically implement the mutterings of their analysts. --Ancheta Wis (talk) 14:39, 3 March 2009 (UTC)
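The spreadsheet practice described above, fitting a formula to past quarterly results and projecting it forward, can be sketched in a few lines of Python. The quarterly figures are hypothetical, and an ordinary least-squares straight line is an assumed stand-in for whatever formula a given spreadsheet uses; the function names are likewise hypothetical. The point is that the projection contains no understanding of why the numbers moved.

```python
def linear_fit(ys):
    """Ordinary least-squares line y = intercept + slope * x through (0, ys[0]), (1, ys[1]), ...

    Needs at least two points.
    """
    n = len(ys)
    xs = range(n)
    x_mean = (n - 1) / 2
    y_mean = sum(ys) / n
    sxx = sum((x - x_mean) ** 2 for x in xs)
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, y_mean - slope * x_mean


def project(ys, quarters_ahead):
    """Extrapolate the fitted line for the next `quarters_ahead` quarters."""
    slope, intercept = linear_fit(ys)
    n = len(ys)
    return [intercept + slope * (n + k) for k in range(quarters_ahead)]


# Hypothetical quarterly results: four quarters of steady growth.
history = [100, 110, 120, 130]
print(project(history, 4))  # → [140.0, 150.0, 160.0, 170.0]
```

Whatever actually happens in the next quarter, the fitted line keeps rising by 10 per quarter; a downturn is simply outside what the formula can express.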

The novel is written in crosscuts, starting with Belknap, then cutting back and forth between Belknap and Bancroft up to the climax, with a pessimistic denouement. Andrea Bancroft is the daughter of a Bancroft whose divorced wife worked for the Bancroft Foundation. Andrea works for Coventry, a hedge fund; her intellect shows her to be a worthy trustee of the Bancroft Foundation, whose head is Paul Bancroft. Paul is an attractive figure to Andrea, who plays basketball with Brandon to prove her worthiness for the trusteeship at the foundation. Unfortunately, Paul indicates that he believes in the greatest good for the greatest number and has the financial strength to back his cause. Andrea is attracted to the idea, but not to its logical consequence, Theta Corporation. Theta uses a supercomputer in Research Triangle Park to calculate the number of people who would benefit when a key person is terminated. Paul must approve these actions to increase the greatest good.

Brandon is Paul's son and intellectual equal. Unbeknown to anyone until the climax, Brandon is attempting to destroy Theta with an internet presence, Genesis.

Mike Garrison revokes Belknap's security clearance, forcing Belknap to go it alone. Belknap asks a friend to bug Andrea's house; the friend discovers that the house is already bugged and is himself assassinated. Belknap asks another CIA operative for information about Genesis; during the meeting, his CIA friend is killed by a sniper.

Belknap is travelling the world to discover who has captured Pollux, his only constant friend, Belknap having lost his wife and girlfriends to spy operations. After killing several hit squads, Belknap discovers that he is on a list of assassination targets, and that there is a link to Theta.

Castor tracks down a Genesis candidate and discovers that Lugner has been hiding in Estonia; this reveals Pollux, Castor's only supposed friend, to be a fraud. Andrea, in the meantime, has run into Bancroft (Castor) and is attempting to flee to him after being forced, for the first time, to kill: to save herself, she kills two employees of Theta in the records section of Iron Mountain. Andrea is drugged and then rescued by Belknap, and they return to the US.

- I have never seen a solution except for the Globe in the upper left hand corner of this page, probably hard-coded to get to the Main page. If anyone solves this problem, it will have an enormous effect for good on the encyclopedia. --Ancheta Wis (talk) 14:13, 25 January 2009 (UTC)

I was going to add the Crab Supernova of 1054 as an example of observer bias, because Britannica notes that no European scientific records of the supernova exist, although Chinese, Japanese, Arab and possibly Native American astronomers recorded the event. In fact, the star was visible during the day. How could this be? That's when I got discouraged about entering it in the article, because of OR (original research) concerns. One plausible explanation is that when the Crab Supernova was visible, it appeared in the celestial sphere of the Moon, which was supposed to be perfect in the European mythology, as opposed to the imperfect terrestrial sphere which man inhabits. Thus comets must be in the terrestrial sphere, as they are harbingers of evil, etc. But if the Crab Supernova was in the sphere of the Moon, that was impossible. Q.E.D.

I agree that this makes no sense. And I don't have a citation for this example of bias. --Ancheta Wis (talk) 03:54, 4 January 2009 (UTC)

- Citations are definitely in order here. It is unfortunate that the literature of the ipl site you list above refers to concepts which have little credibility in our current time. If you look at the theory of the Chinese seismometer from 132 CE, it refers to dragons etc. We have been trained to disregard those concepts, and some bridge articles to translate them into more palatable terms are probably in order. When Newton framed the mechanistic view of the world he moved world civilization away from the unobservables, while still keeping the work of the Ancients, such as Apollonius, Archimedes, etc. Their work survives in the current edifice called Science. But not dragons. What the article needs is concrete advances, their citations, and perhaps some sub-articles. But there is a difference between technology and science. The Four Great Inventions are technology. If you want a starting point, Thales' speculation on the nature of matter is considered by some to be the beginning of science. Now I am not an expert on the corresponding Chinese literature, but where are the citations for the analog of Thales? I am aware that air or chi is very important to Chinese civilization, to the extent that a mother will blow on a child's hurt to soothe it, but where is the literature that speaks to the phases of air, in a similar way as Thales' phases of water? --Ancheta Wis (talk) 21:28, 22 December 2008 (UTC)

- And yet, we are still subject to free fall (motion along the geodesic) when Earth loses its grip on us. It is possible to experience this on Earth's surface, for example on a seashore, standing in the surf, as a wave crashes over our legs: we stand on the sand, upright, while the ocean rips away the sand beneath us; we fall through the sand, sinking toward Earth's center, until our feet arrive at sand which remains under the grip of gravitation and which has not yet been moved by the ocean wave. As the wave recedes, our formerly free feet are now buried in sand. Imagine: if more sand had been washed away beneath our feet, we would have fallen even further during our descent through the sand! If you want to reproduce this, try a barrier island such as Cape Hatteras, North Carolina, and just walk through the surf. The sand there is loose enough to be easily moved by the waves of the Atlantic Ocean, irrespective of Man's attempts to stabilize what are basically sand dunes in the ocean.

- Other well-known examples of the result of inertial motion are the feeling we get as an elevator starts its downward motion, and the feeling we first get during an earthquake, when the ground suddenly moves down with respect to its former position. We are so used to Earth's gravity that we do not realize that we sense it all the time with the organs of balance in our body. This is an aspect of the sense of proprioception, part of the somatosensory system.


- This exchange of views between editors has actually clarified in my mind what we term inertial coordinates:

- we first isolate the part of the system which is in inertial motion

- we compare to the part of the system which is subject to other forces (we write down the geometry of the respective parts of the system, inertial versus non-inertial)

- we write down the forces (à la D'Alembert's principle)

- we solve the motion
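For the elevator example mentioned earlier, the four steps can be sketched as follows. This is a minimal Python illustration under assumed conditions (a rigid elevator with uniform downward acceleration, a 70 kg passenger, g rounded to 9.81 m/s²); the function name is hypothetical. The passenger is the part of the system that may move inertially, the floor supplies the non-inertial constraint force, D'Alembert's principle supplies the force balance, and solving it gives the floor's push N = m(g - a).

```python
G = 9.81  # standard gravity in m/s^2 (rounded)


def apparent_weight(mass_kg, downward_accel):
    """Normal force (newtons) the elevator floor exerts on a passenger of mass mass_kg.

    Take "up" as positive. The real forces on the passenger are the floor's push N
    and gravity -m*g; D'Alembert adds the inertial force -m*a_up = +m*a_down and
    requires the sum to vanish:  N - m*g + m*a_down = 0  =>  N = m*(g - a_down).
    """
    normal = mass_kg * (G - downward_accel)
    # The floor can only push: if the elevator drops faster than free fall,
    # the passenger separates from the floor and N is zero.
    return max(normal, 0.0)


for a in (0.0, 2.0, G):
    print(f"a_down = {a:5.2f} m/s^2 -> N = {apparent_weight(70.0, a):7.2f} newtons")
```

At a = g the floor's push vanishes and the passenger is in free fall; the lighter feeling as an elevator starts downward is a partial version of the same effect.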

Inward Bound: Of matter and forces in the physical world ISBN 0-19-851971-0

| Name | First operational | Numeral system | Computing mechanism | Programming | Turing complete |
|---|---|---|---|---|---|
| Zuse Z3 (Germany) | May 1941 | Binary | Electro-mechanical | Program-controlled by punched film stock | Yes (1998) |
| Atanasoff–Berry Computer (US) | Summer 1941 | Binary | Electronic | Not programmable; single purpose | No |

- ^ According to the law of the diocese, Sanches would have been baptized within 9 days of his birth. Sanches, Limbrick & Thomson 1988, pp. 4–5

- ^ Francisco Sanches (c. 1551–1623) Filósofo, matemático e médico [Philosopher, mathematician and physician] - Biblioteca Nacional de Portugal (in Portuguese)

- ^ See, for example, Horowitz & Hill 1989, pp. 1–44

- ^ Norden

- ^ Singer 1946

- ^ Phillips

- ^ (in French) Coriolis 1836, pp. 5–9

- ^ The noise level, compared to the signal level, is a fundamental factor, see for example Davenport & Root 1958, pp. 112–364.

- ^ Ziemer, Tranter & Fannin 1993, p. 370.

- ^ The publication of Two New Sciences by the House of Elzevir in Leiden, the Netherlands, could ignore any censure by the Index Librorum Prohibitorum, which was abolished only in 1966. See: Andrew Dickson White (1896), A History of the Warfare of Science with Theology in Christendom

- ^ Rod Burstall, "Christopher Strachey—Understanding Programming Languages", Higher-Order and Symbolic Computation 13:52 (2000)